
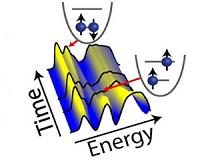

Cambridge, MA (SPX) Mar 04, 2011 Quantum computers are computers that exploit the weird properties of matter at extremely small scales. Many experts believe that a full-blown quantum computer could perform calculations that would be hopelessly time-consuming on classical computers, but so far, quantum computers have proven hard to build.

At the Association for Computing Machinery's 43rd Symposium on Theory of Computing in June, Scott Aaronson, an associate professor of computer science at MIT, and his graduate student Alex Arkhipov will present a paper describing an experiment that, if it worked, would offer strong evidence that quantum computers can do things that classical computers can't. Although building the experimental apparatus would be difficult, it shouldn't be as difficult as building a fully functional quantum computer.

Aaronson and Arkhipov's proposal is a variation on an experiment conducted by physicists at the University of Rochester in 1987. That experiment relied on a device called a beam splitter, which takes an incoming beam of light and splits it into two beams traveling in different directions. The Rochester researchers demonstrated that if two identical light particles (photons) reach the beam splitter at exactly the same time, they will both go either right or left; they won't take different paths. It's another quantum behavior of fundamental particles that defies our physical intuitions.

The MIT researchers' experiment would use a larger number of photons, which would pass through a network of beam splitters and eventually strike photon detectors. The number of detectors would be roughly the square of the number of photons: about 36 detectors for six photons, 100 detectors for 10 photons. For any single run of the MIT experiment, it would be impossible to predict how many photons would strike any given detector. But over successive runs, statistical patterns would begin to build up.

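
The two-photon Rochester effect can be checked with a few lines of arithmetic. The following is a minimal sketch, not taken from the paper: it assumes a lossless beam splitter described by the real mode matrix [[t, r], [r, -t]] (one common sign convention), perfectly identical photons arriving at the same instant, and hypothetical function names. For identical photons, the two ways of ending up on opposite sides (both transmitted, both reflected) have amplitudes that add before squaring, and for a 50:50 splitter they cancel exactly; for distinguishable particles the probabilities add instead.

```python
import math


def identical_photon_outcomes(t):
    """Outcome probabilities for two identical photons entering a lossless
    beam splitter from opposite sides at the same instant.

    t is the transmission amplitude; the reflection amplitude is
    r = sqrt(1 - t**2). Assumes the mode matrix U = [[t, r], [r, -t]].
    Returns (P both exit left, P one exits each side, P both exit right).
    """
    r = math.sqrt(1 - t * t)
    # One photon per side happens two ways: both transmitted (t * -t)
    # or both reflected (r * r). For identical photons the AMPLITUDES add.
    one_each = (t * -t + r * r) ** 2
    # Both photons on the same side; the factor 2 is the bosonic
    # enhancement for two photons sharing one output mode.
    both_left = 2 * (t * r) ** 2
    both_right = 2 * (r * -t) ** 2
    return both_left, one_each, both_right


def distinguishable_one_each(t):
    """Same quantity for distinguishable particles: probabilities add."""
    r = math.sqrt(1 - t * t)
    return t ** 4 + r ** 4


if __name__ == "__main__":
    t = math.sqrt(0.5)  # 50:50 beam splitter
    print([round(p, 3) for p in identical_photon_outcomes(t)])  # [0.5, 0.0, 0.5]
    print(round(distinguishable_one_each(t), 3))  # 0.5
```

With a 50:50 splitter, identical photons never leave on opposite sides, while distinguishable particles would do so half the time; that gap is the quantum signature the Rochester experiment looked for.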
In the six-photon version of the experiment, for instance, it could turn out that there's an 8 percent chance that photons will strike detectors 1, 3, 5, 7, 9 and 11, a 4 percent chance that they'll strike detectors 2, 4, 6, 8, 10 and 12, and so on, for every conceivable combination of detectors. Calculating that distribution (the likelihood of photons striking each combination of detectors) is a hard problem. The researchers' experiment doesn't solve it outright, but every successful run of the experiment does draw one sample from that distribution.

One of the key findings in Aaronson and Arkhipov's paper is that not only is calculating the distribution a hard problem, but so is simulating the sampling process. Simulating an experiment with more than, say, 100 photons would probably be beyond the computational capacity of all the computers in the world.

The question, then, is whether the experiment can actually be carried out. The Rochester researchers performed it with two photons, but getting multiple photons to arrive at a whole sequence of beam splitters at exactly the right time is more complicated. Barry Sanders, director of the University of Calgary's Institute for Quantum Information Science, points out that in 1987 the Rochester researchers were using lasers mounted on lab tables, and they got photons to arrive at the beam splitter simultaneously by sending them down fiber-optic cables of different lengths. Recent years, however, have seen the advent of optical chips, in which all the optical components are etched into a silicon substrate, making it much easier to control the photons' trajectories.

The biggest problem, Sanders believes, is generating individual photons at intervals predictable enough to synchronize their arrival at the beam splitters. "People have been working on it for a decade, making great things," Sanders says. "But getting a train of single photons is still a challenge."

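
To see why calculating the distribution is expensive, here is a brute-force sketch with hypothetical function names. It relies on a standard result from linear-optics theory that this article does not state: with one photon entering each of n input ports, the probability of a given detector pattern equals the squared magnitude of the permanent of an n x n submatrix of the matrix describing how the network mixes its ports, divided by the factorials of the photon counts at each detector. The permanent is computed with Ryser's formula, whose cost roughly doubles with each added photon. As assumptions, the beam-splitter network is replaced by a discrete Fourier transform matrix, detectors are taken to be lossless and to count photons exactly, and the toy case uses 3 photons and 9 detectors. The last step draws simulated runs from the exact distribution, mimicking what each real execution of the experiment provides.

```python
import cmath
import collections
import itertools
import math
import random


def permanent(a):
    """Permanent of a square matrix via Ryser's formula.

    Sums over all 2**n - 1 nonempty column subsets, so the running time
    grows exponentially with n; no polynomial-time algorithm is known.
    """
    n = len(a)
    total = 0
    for size in range(1, n + 1):
        for cols in itertools.combinations(range(n), size):
            prod = 1
            for row in a:
                prod *= sum(row[c] for c in cols)
            total += (-1) ** size * prod
    return (-1) ** n * total


def dft_unitary(m):
    """m x m discrete Fourier transform matrix (hypothetical stand-in network)."""
    return [[cmath.exp(2j * math.pi * j * k / m) / math.sqrt(m) for k in range(m)]
            for j in range(m)]


def output_distribution(unitary, n_photons):
    """Exact probability of every detector pattern, assuming one photon enters
    each of the first n_photons ports. A pattern is a sorted tuple of detector
    indices; a repeated index means several photons hit that detector."""
    m = len(unitary)
    inputs = range(n_photons)
    dist = {}
    for pattern in itertools.combinations_with_replacement(range(m), n_photons):
        sub = [[unitary[d][i] for i in inputs] for d in pattern]
        norm = 1
        for d in set(pattern):
            norm *= math.factorial(pattern.count(d))
        dist[pattern] = abs(permanent(sub)) ** 2 / norm
    return dist


def simulate_runs(dist, runs, seed=0):
    """Draw one detector pattern per simulated run of the experiment."""
    rng = random.Random(seed)
    patterns = list(dist)
    return rng.choices(patterns, weights=[dist[p] for p in patterns], k=runs)


if __name__ == "__main__":
    n, m = 3, 9  # three photons, n**2 detectors
    dist = output_distribution(dft_unitary(m), n)
    print(len(dist), "possible patterns")  # 165 possible patterns
    print("total probability", round(sum(dist.values()), 6))  # total probability 1.0
    counts = collections.Counter(simulate_runs(dist, 10_000))
    for pattern, hits in counts.most_common(3):
        print(pattern, hits / 10_000, "exact", round(dist[pattern], 4))
```

Even at this toy scale the code evaluates 165 permanents. For six photons and 36 detectors there are C(41, 6) = 4,496,388 possible patterns, and at the 100-photon scale mentioned above brute force is hopeless. The article's claim goes further than this sketch can: Aaronson and Arkhipov argue that even producing samples, without writing out the distribution, is classically intractable, and nothing here bears on that.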
Sanders points out that even if the problem of getting single photons onto the chip is solved, photon detectors still have inefficiencies that could make their measurements inexact: in engineering parlance, there would be noise in the system. But Aaronson says that he and Arkhipov explicitly consider the question of whether simulating even a noisy version of their optical experiment would be an intractably hard problem. Although they were unable to prove that it was, Aaronson says that "most of our paper is devoted to giving evidence that the answer to that is yes." He's hopeful that a proof is forthcoming, whether from his research group or others'.


The content herein, unless otherwise known to be public domain, is Copyright 1995-2010 SpaceDaily. AFP and UPI Wire Stories are copyright Agence France-Presse and United Press International. ESA Portal Reports are copyright European Space Agency. All NASA sourced material is public domain. Additional copyrights may apply in whole or part to other bona fide parties. Advertising does not imply endorsement, agreement or approval of any opinions, statements or information provided by SpaceDaily on any Web page published or hosted by SpaceDaily.